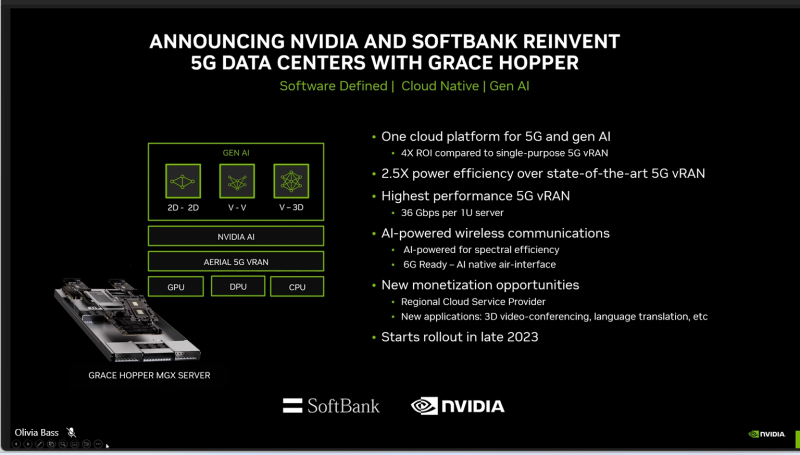

Boosted by intense investor interest in artificial intelligence (AI), Nvidia is selling its latest GH200 Grace Hopper data center chip as a way to build generative AI data centers that can also run 5G.

The company, which just hit a market cap of $1 trillion this week, largely thanks to the hype around AI, is dead set on riding that gravy train way down the line. Now, it's talking about its chips accelerating AI, rather than just boring old 5G.

“5G now runs as a software-defined workload in an AI factory,” Ronnie Vasishta, SVP of telecom at Nvidia, said on a call about the new chip.

SoftBank has signed up to use the Grace Hopper chips with Nvidia’s MGX architecture to roll out new distributed AI data centers across Japan late this year.

Nvidia is keen to highlight its “software-defined acceleration” with the GPU as a way of handling multiple workloads, from layer 1 virtual radio access network (vRAN) acceleration to AI tasks. “We have been able to show that within one accelerator, you’re able to run both intelligent video analytics using AI and RAN,” Vasishta commented.

The well-favored chip designer said that being able to handle multiple workloads with GPU software acceleration sets it apart from its rivals in the industry.

Like Nvidia, Marvell and Qualcomm are focusing on inline accelerators to boost vRAN performance. Intel, meanwhile, the virtual king of the cloud-RAN arena right now, prefers lookaside and integrated accelerators to gin up its RAN results.

Do you want to learn more about the cloud-native 5G market? Sign up to attend our virtual Cloud-Native 5G Summit today.